A client of Cre8d Design had been experiencing a number of SEO- and marketing-related issues. First, the client was seeing a reduced presence in Google after ranking at the top of the search results for years.

Second, while prospects continued to contact the client via web forms, many of these people weren’t responding to the client’s callbacks or emails.

In August 2016, Cre8d Design’s Rachel Cunliffe recommended the client contact Huff Industrial Marketing for an SEO and website audit.

When Googling isn’t the answer

The client believed search engine rankings had been affected because of a practice many of us engage in: Googling our company to see where we are in the search results.

This practice is problematic for many reasons.

One reason is our own search history. The more you search for a particular thing, the more Google serves you results that match what it thinks you’re looking for. If you regularly search for your company and associated keywords, Google will show your company first because it knows that’s what you’re looking for.

Your physical location and the device you use also play a role, as do other factors. Suffice it to say, if you’re Googling your company and conclude your SEO program is “fine” because you’re coming up at the top for your searches, that conclusion could be highly inaccurate.

It can also work in reverse: you can Google your company or keywords and not see your company in the search results — and thus conclude your SEO “isn’t working.”

And, if your site has enjoyed high rankings for years, seeing a fall off can give rise to panic — because lower rankings mean less traffic, which means less business. This is exactly what Cre8d’s client was experiencing when she Googled her keywords and noticed her rankings had dropped considerably.

Step #1: Look at Google Analytics traffic patterns

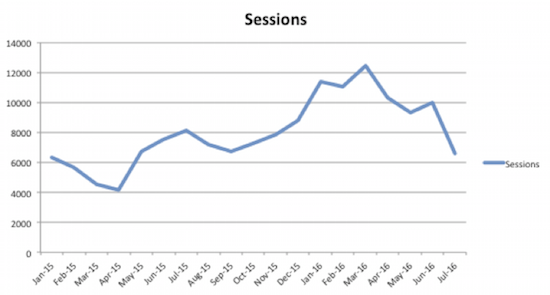

I generally like to look at sessions data in various ways: YTD for the last 6, 12, 18 and 24 months and by comparing various periods and segments. This way, I can see “blips” in the traffic patterns.

The problem became clear pretty quickly: the sessions data showed a precipitous drop in traffic beginning in March 2016 after a steady increase through 2015. If traffic kept dropping, it would fall below 2015 levels.

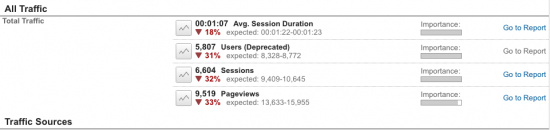

In fact, Google Analytics had flagged the drop with an alert in Intelligence Events, as you can see in the next screenshot:

As soon as I saw the data, I immediately thought, “uh oh.” I’ve seen this type of drop before for sites that have been penalized by Google.
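The period-over-period comparison described in this step can be sketched in a few lines of Python. This is only an illustration: the function names, the 20% threshold, and the session counts are all assumptions made up for the example, not the client's data or anything Google Analytics provides.

```python
def percent_change(previous, current):
    """Return the percent change from the previous period to the current one."""
    if previous == 0:
        raise ValueError("previous period has no sessions to compare against")
    return (current - previous) / previous * 100.0


def flag_drops(monthly_sessions, threshold=-20.0):
    """Return (label, change) pairs where sessions fell by the threshold or more.

    monthly_sessions is a list of (label, sessions) tuples in date order.
    The -20% default threshold is an arbitrary, hypothetical cut-off.
    """
    flagged = []
    # Pair each month with the month that follows it.
    for (_, prev), (label, curr) in zip(monthly_sessions, monthly_sessions[1:]):
        change = percent_change(prev, curr)
        if change <= threshold:
            flagged.append((label, round(change, 1)))
    return flagged


# Hypothetical session counts for illustration only.
sample = [("Jan", 1000), ("Feb", 1050), ("Mar", 800), ("Apr", 600)]
print(flag_drops(sample))
```

Exporting monthly sessions from Analytics and running them through something like this makes a "blip" easy to spot, though the chart itself usually tells the same story.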

Step #2: View Search Console data

Search Console is an amazing tool: it shows the health of a website from a technical perspective, including whether Googlebot has indexed pages, whether it has encountered errors, and whether the site has contracted malware.

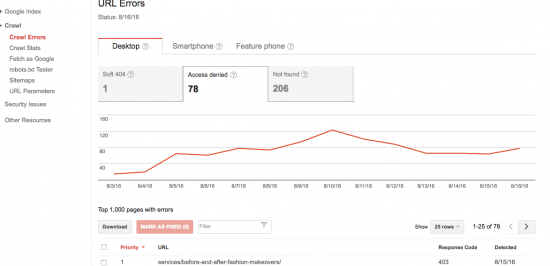

Within the client’s Search Console, one problem was immediately apparent: 403 errors.

A 403 error is the HTTP “Forbidden” status: the server received the request but refuses to grant access to the page. You see 403 errors in action when you try to access a website and get the message “Access forbidden” and an otherwise blank screen.

For the client site, the URL Errors Report showed 78 “access denied” errors.

In addition, the Sitemaps Report showed two warnings, both of which read: “When we tested a sample of the URLs from your Sitemap, we found that some URLs were not accessible to Googlebot due to an HTTP status error. All accessible URLs will still be submitted.”

However, since I could view the website and its pages, and the website was appearing in Google for searches, I didn’t understand the reason for these error messages.
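One way to spot-check which sitemap URLs return a 403 is a small script like the one below. It is a minimal sketch using only the Python standard library; the function names and the sample sitemap are hypothetical. One important caveat: sending Googlebot's user-agent string does not make the request come from a Google IP address, so if a host blocks by IP (as turned out to be the case here), this check can report everything as fine while Search Console still shows errors. Search Console remains the authoritative source.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# A Googlebot-style user-agent string. Using it only changes the header;
# the request still originates from your own IP address.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def extract_urls(sitemap_xml):
    """Return the <loc> values listed in a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def fetch_status(url, user_agent=GOOGLEBOT_UA, timeout=10):
    """Return the HTTP status code for a URL (makes a real network request)."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx responses; the code is what we want.
        return err.code


def forbidden_urls(statuses):
    """Given a {url: status} dict, return the URLs that answered 403."""
    return sorted(url for url, code in statuses.items() if code == 403)


# Hypothetical sitemap for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about/</loc></url>
</urlset>"""

print(extract_urls(SAMPLE_SITEMAP))
```

To check a live site, you would build the status dictionary with `{url: fetch_status(url) for url in extract_urls(xml_text)}` and pass it to `forbidden_urls`.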

I then contacted Stephen Merriman, Cre8d Design’s programmer / developer, as both the 403 errors and the sitemap warnings were indications of technical issues.

According to Stephen, the client’s web host had added a huge amount of code to the .htaccess file to block various robots and scrapers. This code included some lines aimed at Googlebot, where it appeared the host was blocking certain Googlebot IP addresses.
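If you can get a copy of the .htaccess file, a quick scan for lines that mention Googlebot or deny IP ranges can help you decide what to ask your developer about. The sketch below is an assumption-laden illustration: the patterns cover only a couple of common Apache directives, and the sample rules are hypothetical, not the ones the host actually added. A flagged line is a prompt for review, not proof of a problem.

```python
import re

# A deliberately small, hypothetical set of patterns; real files may block
# crawlers in other ways that this will not catch.
SUSPECT_PATTERNS = [
    ("mentions Googlebot", re.compile(r"googlebot", re.IGNORECASE)),
    ("denies an IP or range",
     re.compile(r"^\s*(deny\s+from|require\s+not\s+ip)\s+\d", re.IGNORECASE)),
]


def find_suspect_lines(htaccess_text):
    """Return (line_number, reason, line) tuples worth a closer look."""
    findings = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        stripped = line.strip()
        # Skip blank lines and Apache comments.
        if not stripped or stripped.startswith("#"):
            continue
        for reason, pattern in SUSPECT_PATTERNS:
            if pattern.search(line):
                findings.append((number, reason, stripped))
    return findings


# Hypothetical rules for illustration only.
SAMPLE_HTACCESS = "\n".join([
    "# bot blocking added by host",
    "RewriteEngine On",
    "RewriteCond %{HTTP_USER_AGENT} (scraperbot|Googlebot) [NC]",
    "RewriteRule .* - [F,L]",
    "Order Allow,Deny",
    "Allow from all",
    "Deny from 192.0.2.0/24",
])

for number, reason, line in find_suspect_lines(SAMPLE_HTACCESS):
    print(f"line {number}: {reason}: {line}")
```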

Why blocking Googlebot can affect search engine rankings

According to a post by Cognitive SEO, .htaccess code that blocks Googlebot can cause a huge drop in rankings — and you won’t even know why!

That’s because .htaccess is a configuration file for use on web servers running the Apache Web Server software. It’s something you as a business owner or marketer don’t usually see or even know exists — thus, you’d never check it for technical issues relating to SEO. (To learn more about .htaccess, see: .htaccess-guide.com.)

But, .htaccess is incredibly important because, as Cognitive SEO post author Razvan Gavrilas writes, “Google has made it clear that blocking their access to resources on your website can detrimentally impact how well your site ranks. And lower rankings mean less traffic and conversions — so a pretty compelling reason to conform. Ideally, you shouldn’t be blocking any resources from Googlebot.”

Once Rachel saw Stephen’s reply and the traffic chart, she and Stephen immediately put things together: the drop in traffic began in March, which is when the client had begun receiving notices from the web host that the website was using “too many resources.”

Bottom line: the website host was blocking Googlebot from fully accessing the website, which was why the site’s Google rankings were dropping and, with them, its traffic.

Recommendation: Change web hosts, immediately

The client had been doing business with the web host for years and hadn’t wanted to change; however, the web hosting company had increased the client’s monthly hosting fee to accommodate the rise in “resources used” — all the while blocking the important Googlebot.

Seeing the data made the issue clear: yes, it’s time to change. Working with Cre8d Design, the client changed hosts within 48 hours. As part of the process, Stephen cleaned out the offending code from the .htaccess file.

Troubleshooting the client’s two-step inquiry process

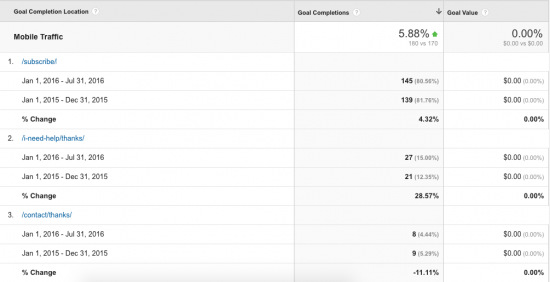

After analyzing the client’s traffic and search query data (found in Search Console) and the goal conversion data in Analytics, it became clear that while traffic had dropped, prospects were finding the company using dozens of search queries . . . and they were filling out the website forms.

Even more interesting, while traffic was evenly divided between mobile and desktop, mobile had actually increased for 2016 — and it was driving conversions, as you can see in the next screenshot.

The problem, it seemed, was with the client’s two-step inquiry process.

Upon receiving the form submission, the client would email the prospect to set up a call — a process which often involved a lot of back and forth email. Sometimes prospects wouldn’t respond to the client’s emails; other times, a call would be scheduled but the prospect wouldn’t answer the phone.

What the data said to me and to Rachel was that a disconnect existed between the time a prospect filled out a form and the time the client responded to it, even if the client responded within minutes!

To solve this problem, Rachel suggested the client use Acuity Scheduling, a call-booking application. This software allows users to combine a form and call booking into one step, thus eliminating the two-step process and all the back-and-forth emails.

What I liked about this recommendation is that it took into account the website’s mobile users — and the fact that they could be anywhere when viewing the client website and clicking on the calls-to-action.

With Acuity, prospects could easily book calls no matter the device being used or their physical location (doctor’s office, carpool line, public transit commute, etc.) and then easily add the booked event to their calendar.

I recommended the client change the copy on the call-to-action button to “Book a Call Now” so that the next step was very clear to prospects, and then move the call-to-action to the top of pages (versus the footer) so that it was more visible to all users, but especially mobile ones (thus eliminating scrolling through multiple “screens” to find it).

Results due to website audit changes

Once the website was moved to a different host and the Googlebot blocking code removed from the .htaccess file, traffic began increasing again, as did the number of pages Google indexed. Within two weeks, clicks from the Google search results pages to the website had increased by 47%.

Equally important, Search Console no longer displayed the sitemap warnings, and the number of 403 errors decreased from 78 to 20. (At last check, these were for blog tags; we’re waiting for things to continue to shake out and anticipate these going away at some point.)

Even better, one week after installing Acuity, the client closed the first deal after a booked call — and continues to see calls being booked.

For Rachel Cunliffe and me, this was one of our more satisfying projects. What was most interesting to us is that while the problem was SEO-related, it was a highly technical issue that couldn’t be diagnosed by “Googling,” even though the “symptoms” suggested otherwise. Once properly diagnosed, the problem was easily fixed.

For me, it brought home the fact that successful online marketing involves a qualified team of people who complement each other: a developer / programmer, a designer / consultant, and a marketer / consultant.

Each of us has our respective specialty and expertise, but like a Venn diagram, we also overlap. It’s this overlap that leads to continued success — for us and for our clients.

If you’re not sure how your website is doing “SEO-wise,” or if you’d like to talk about hiring Huff Industrial Marketing to help you better manage your marketing and SEO efforts — and see improved results over time — book a call with me to discuss. Or, simply pick up the phone and call: 603-382-8093.